AI Can Write Your Paper, But Should You Let It?

An increasing number of academic papers are being written with AI. This transformation of the research process calls into question the very role of a researcher, both now and in the future.

I recently got a behind-the-scenes look at AI writing assistants for researchers, and was blown away. Not only can these tools write, translate and edit for you, but they will soon be able to tell you where in your sources they found the answers.

We’re rapidly accelerating towards a future where academic articles may be largely written by AI. If you’re scoffing at this idea, consider the newest wave of features: “AI detectors”, designed to estimate what percentage of a written piece was generated by AI, with the aim of letting educators decide how much of a paper may be written by AI (10%, 40%, 100%). But this cat-and-mouse game will no doubt evolve quickly, as ever more advanced text generation makes AI detection harder.

What does this all mean for the modern researcher? The one who seeks to truly understand their topic, create high-quality research, and take ownership of our collective knowledge? With tools that replace each part of the research process, this idea of a researcher may not be around much longer.

In this edition, we dive into the topic of using AI in your academic writing, the powerful features available, and the key reasons why we should and should not use it.

Learn the most essential skills and AI tools for your research:

The Future Ready Scholar Conference

With 4 expert speakers over 2 days, this is a crash course in accelerating your research while upholding your academic integrity.

AI writing assistants today

If you’re still playing catch-up, you’ve entered the space at a great time. Today, there are several remarkable AI writing assistants for academics, such as Jenni AI and Grammarly, with varying degrees of automation. But keep in mind that many of these tools are simply built on GPT technology.

Try out these tools and you’ll quickly feel that you’re optional in the writing process. Today, AI can make a draft of your paper, generate citations, and even change the tone or style to your liking.

These benefits aren’t just handy; they significantly alter the writing process. It’s no wonder an increasing number of researchers rely on these tools. One evaluation found that 17% of computer science papers and 6% of math and Nature papers contain AI-generated text.

But collectively, we seem to have bypassed the should and moved quickly onto the how. You’ll find countless articles and workflows for incorporating these tools into your research, but little to no attention to their long-term implications.

Long-term objectives matter. There’s increasing evidence that excessive reliance on these assistants could lead to a very different world, one we may not recognize.

Why we should not use these tools

Despite the prevailing popularity of AI in research and writing, some scientists are still skeptical, and there are good reasons why. Some worry about the dubious quality of AI-generated text. Others are concerned that their content may be flagged by AI detectors (although this can be increasingly avoided by using AI detector evaluator tools). Some may be trying to follow the guidelines of their institution or journal.

Although these acute reasons demand attention and consideration, it’s the long-term unknowns that may pose the greatest risk.

Decline in research quality

The main argument goes something like this: as more and more text is AI-generated, it sets a certain standard for writing overall. Researchers who use AI writing assistants can produce papers faster, and thus have more opportunities to submit their work. This ultimately leads to more and more AI-based text, until it makes up the majority of written text.

On the face of it, this wouldn’t be a problem. However, there are issues with AI-based text that make this an undesirable future. Publishers, in general, fear a future of low-quality work. Academic publications aren’t alone: this fear is prevalent across all forms of writing, including fiction. Moreover, there’s a realization among some writers that although AI can help improve bad writing, it sometimes makes good writing worse.

Decline in critical thinking

A growing concern about AI tools is how they compromise essential skills like creativity, critical thinking and writing. For example, one recent study found that LLMs reduce the depth to which a student explores a field. Another recent study, a survey of over 300 knowledge workers, also had some stark findings when it came to critical thinking and AI: higher confidence in generative AI was associated with less critical thinking.

Although these studies are not specific to academic writing, the takeaways are likely relevant across all uses of AI in the future. As we grow more reliant on these automated tools and more confident in their capabilities, we may face a corresponding decline in our critical thinking skills, since we can outsource them.

Decline in academic integrity

Perhaps one of the most common criticisms of AI today, particularly in higher education, is the risk it poses to academic integrity. The majority of news articles on ChatGPT argue that it facilitates cheating and plagiarism. Teachers have to rapidly adapt assignments in order to contend with the fact that most assignments and exams can now be easily accomplished using the tool.

This issue is just as serious, if not more so, at more advanced levels of research. When graduate students or even senior academics use such tools to automate the majority of their tasks, how much ownership do they still take in their work?

Why we should use these tools

Although there are plenty of intimidating risks and challenges surrounding AI in writing and research, there are equally powerful reasons to use these tools. Below are two of the most persuasive arguments, as well as guidelines on how to use generative AI for responsible academic writing.

Enhance research writing

Anyone who has played around with ChatGPT or AI writing assistants knows the feeling of relief when a well-formulated piece of text pops out based on a few jumbled thoughts. That boost in efficiency and productivity is just the tip of the iceberg when it comes to improving our writing with automated tools.

Writing assistants are powerful aids when you start a paper from scratch or write in a foreign language. They are especially useful for outlining and drafting, as well as for later steps in the process like grammar and syntax checking. Researchers struggling to write in a non-native language can also use them to make their writing more coherent, clear and accurate.

Remain competitive in the future

Regardless of the results of the ethical debates taking place, AI tools will continue to be used, and at ever-increasing rates. A 2024 paper, “Delving into ChatGPT usage in academic writing through excess vocabulary”, found an explosion in particular kinds of words in publications from PubMed since the advent of widespread LLM use. Although we hardly need more evidence, it’s a clear signal that many researchers today are already using AI in their academic writing. Moreover, reliance on these tools likely won’t abate, and it may become harder to spot as the technology and careful usage improve.

So, how can you remain competitive in a world where all your colleagues and peers are automating their work? Consider the common hook we read across the Internet: “If you’re not using ___, you’re falling behind.” Replace the blank with virtually any AI tool, and you’ll find plenty of results.

Although this alone isn’t a compelling reason to use these tools, it’s an observation of the natural human tendency to experiment and quickly adapt to a new status quo.

How to use AI for writing: Important guidelines

Most researchers today seek to use AI tools consciously, by understanding how they work and using them for the parts of the process that are best suited to automation. Now that a few years have passed, there are a number of available guidelines, rules and regulations for the usage of generative AI in writing.

New guidelines are continuously coming out for academic writing with AI, which provide useful tips and ethical considerations. This 2024 set of guidelines recommends these three principles:

Human vetting and guaranteeing: At least one author takes responsibility for the accuracy of the article

Substantial human contribution: Each author contributes significantly in concept, analysis or AI-usage editing

Acknowledgement and transparency: Authors confirm usage of LLMs

Since many journals have different rules, some researchers seek to standardize AI-related guidelines. For example, these authors are attempting to compile a set of consistent guidelines for using ChatGPT in research that could become universal.

In the meantime, it’s best to confirm with the journal or venue you’re submitting to, as well as to review the latest ethical guidelines available online.

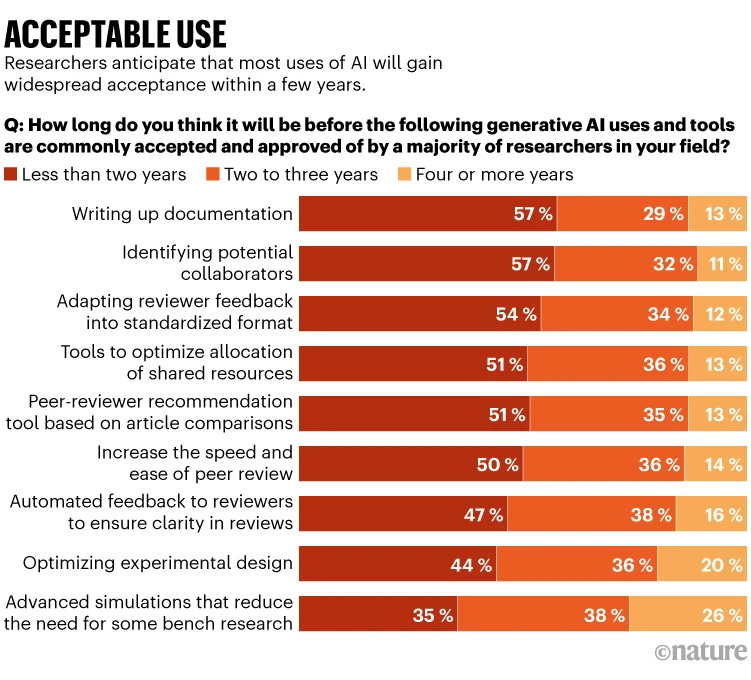

It’s a tricky game in today’s rapidly evolving research environment. You want to use the newest technology to remain competitive, but you can’t compromise your scholarly rigor or academic integrity. The good news is that the vast majority of researchers today are optimistic about AI. According to the latest surveys, 94% of researchers and 96% of clinicians think AI will help accelerate knowledge discovery, and most are happy to rely on these tools (so long as they’re backed by trusted content).

Although journal guidelines offer some guardrails for usage, they deal primarily with the acute and short-term implications of AI in academic research and writing. When it comes to long-term concerns about a decline in critical thinking, innate writing skills, or academic integrity, we simply don’t know.

How do you responsibly use AI in your writing? What guidelines do you use, whether personally or institutionally? Share your thoughts with the research community in the comments below.

Resources

Is AI making us stupider? Maybe, according to one of the world’s biggest AI companies, Feb 2025

Guidelines for ethical use and acknowledgement of large language models in academic writing, 2024

The promise and perils of using AI for research and writing, 2024

Why AI Makes Bad Writing Better but Good Writing Worse, 2024

Most Researchers Use AI-Powered Tools Despite Distrust, 2024

Delving into ChatGPT usage in academic writing through excess vocabulary, 2024
